In recent years, law enforcement agencies have increasingly deployed technological solutions to prevent and solve gun crimes. Agencies and elected officials have long recognized the need for rigorous evaluations of these technologies. What is often overlooked, however, is a clear-eyed statement of what a successful or unsuccessful program looks like. Instead, evaluation criteria are often driven by whatever data happen to be available rather than by a thoughtful assessment of which outputs and outcomes matter. Without a clear articulation of the expected changes in outcomes, evaluations become an exercise in interpreting scattershot analyses that were conducted because they were possible, rather than because they were needed or would provide worthwhile evidence on the system's effectiveness.

In this post, we describe what a successful technology evaluation strategy would look like. Although the general ideas we illustrate are broadly applicable, we focus this discussion on acoustic gunshot detection (AGSD) systems. AGSD systems triangulate the location of a gunshot using a network of microphones mounted throughout a city. These microphones pick up the sound produced by a gunshot, and each recorded event is then subjected to a combination of automated software and human review to determine whether it was caused by a gunshot. Events determined to be gunshots are then forwarded to a law enforcement agency for response.

One reason agencies might find AGSD systems appealing is that establishing whether and where gunfire has occurred has historically been difficult for law enforcement. If agencies are unable to identify or locate gunfire incidents, this will likely have a cascading effect on crime and community perceptions. For example, when gunshots go undetected, gun crimes go unsolved, and low rates of detection and case clearance reduce the perceived risk of committing gun crimes (i.e., they weaken general deterrence), which can in turn lead to additional gun crimes. This snowball effect can also erode the community's trust in law enforcement agencies' ability to do this type of work.

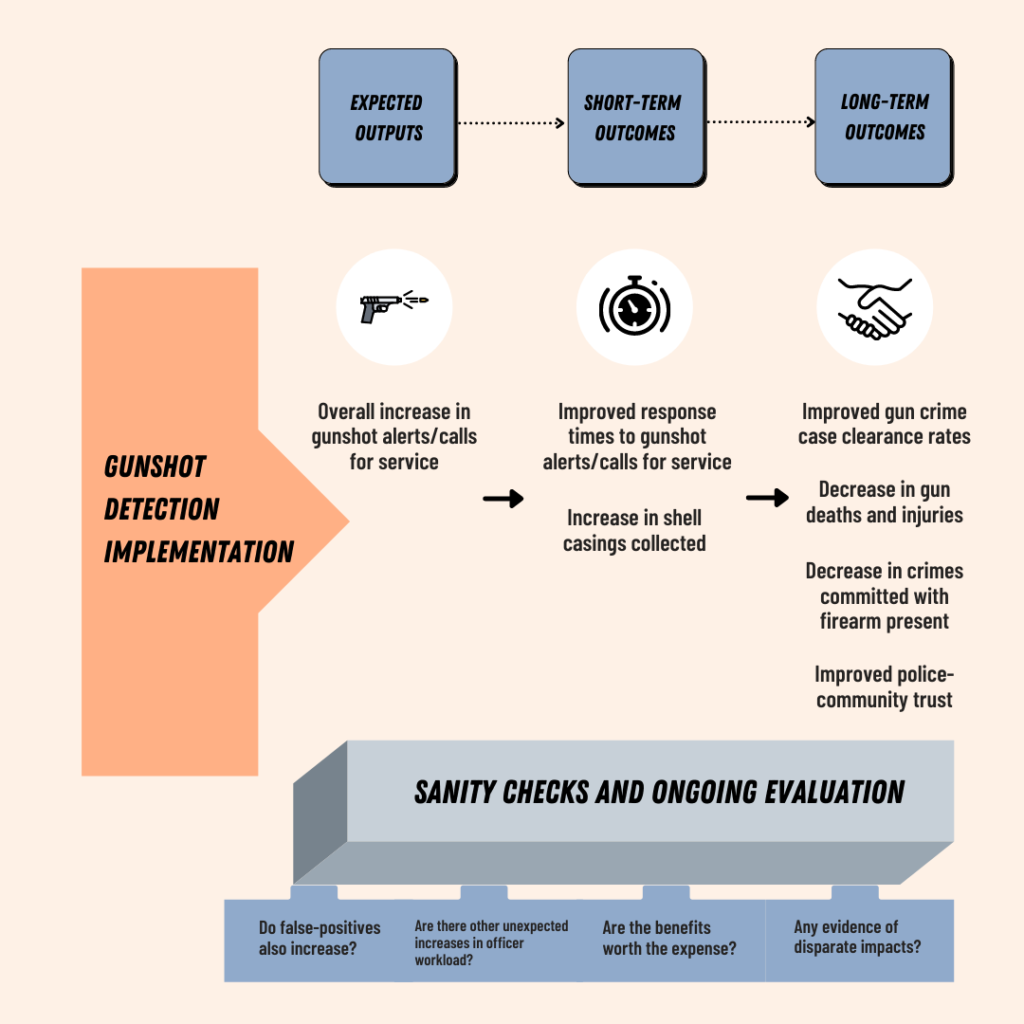

This hypothesized feedback pattern provides critical insight into how an evaluation strategy could be developed, and it also offers an outline of what we would expect a successful technological intervention to look like. Figure 1 illustrates a full logic model of our expectations once an AGSD system has been implemented. Read from left to right, the figure shows the expected outputs of the implementation, the short-term outcomes, and the long-term outcomes. At the bottom of the figure is a series of questions that should be considered in addition to the main question of whether the system has improved the outcomes that matter.

The expected outputs in Figure 1 are the mechanical changes that follow directly from the system's operation. For instance, adopting an AGSD system should lead to an increase in the number of alerts or calls for service (CFS), because the system is always on and can detect gunshots in areas where few people are around, or at times when residents are absent or asleep. These outputs should be thought of as a quality check that the system is functioning at a basic level.

The short-term outcomes are the things we expect a fully functioning system to change immediately. Because AGSD technology is largely marketed as a way to achieve faster response times and better detection of events, the first and most proximal test of effectiveness, once the system is functioning at a basic level, is whether officers can quickly and accurately locate the places where gunshots occur. If a functioning system enables officers to do this, we should observe immediate improvements in response times and in the evidence collected. An AGSD system that achieves these objectives is delivering what it was advertised to do.

However, it is crucial to acknowledge that agencies do not deploy AGSD systems because they view improved response times and increased forensic evidence collection as the final objective. These metrics matter only insofar as they lead to improvements in long-term outcomes that contribute to public safety. Many outcomes fit this description, but a good evaluation focuses on those most closely associated with the short-term outcomes. The more distal the link between a long-term outcome and the short-term outcomes, the less likely the AGSD system is to affect that long-term outcome, and the more difficult any effect will be to detect. Examples of long-term outcomes closely linked to AGSD performance include improved gun crime clearance rates and decreases in gun deaths and injuries. More distally linked, but still suitable for study, are whether guns are involved in a lower proportion of crimes and whether community-police trust improves.

It is also worth considering how one might interpret a set of findings indicating that an AGSD system has no effect on short-term outcomes, even though the evaluation finds changes in long-term outcomes. This may imply that the intervention is working through mechanisms other than faster response times and improved evidence collection, or that something other than the AGSD system is driving the change in long-term outcomes. Careful analysis may be able to rule out some of these possibilities, but in either case it is probably wise to be more circumspect about the AGSD system's effects.

Finally, it is important to gain a full understanding of all potential changes that result from implementing technological solutions to gun crime. Conducting sanity checks and ongoing evaluation is crucial for making informed decisions about the value of these systems. For instance, an AGSD system could increase gunshot alerts/CFS simply by registering false alerts triggered by loud stereos, backfiring cars, or heavy machinery; such a system is unlikely to prove particularly useful. Other checks an evaluation might consider are whether the system produces further, unexpected increases in officer workload, whether it has differential impacts on different communities, and whether its expense is justified by its benefits. For instance, could an agency achieve similar improvements in public safety simply by hiring an additional officer instead?

Broadly, we think this is an effective model to use when evaluating AGSD systems or other technological solutions. The specific metrics used, and even the ability to examine all the dimensions we have described, will vary from evaluation to evaluation depending on which data sets are available, the terms of the evaluation, and how the implementation was rolled out. By outlining a logic model, describing the metrics used at each stage, and specifying the analytic approach, agencies and evaluators can be clear about whether and how AGSD systems are working as expected, and whether their costs are justified by the observed benefits.

In our next post, we will describe an evaluation of an AGSD system that was implemented in the City of Wilmington, DE.